Mother Meets With Pope Leo XIV After Son’s Suicide Linked to AI Chatbot

Warning: this story contains distressing content and discussion of suicide

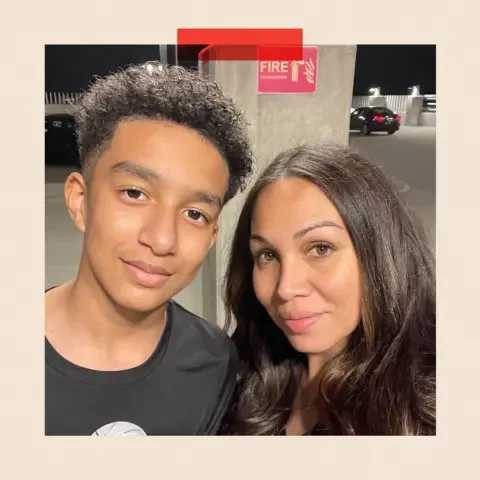

(Photo: Megan Garcia)

November 14 — Vatican City

Megan Garcia met privately with Pope Leo XIV this week, seeking comfort and calling global attention to the growing risks AI chatbots pose to vulnerable young people. The meeting follows the suicide of her 14-year-old son, who had been confiding in an AI companion in the days before his death.

Garcia called the conversation with the Pope “deeply consoling” and “urgently necessary,” stressing the need for stronger protections for minors using emotionally responsive AI. “Our children are forming emotional attachments to systems designed to seem human,” she said. “But these systems are not built to keep them safe.”

Her son had been influenced by AI-generated discussions about astral projection, the belief that a person's consciousness can separate from the physical body and travel to other places or realms by means of an "astral body."

In his final message to a chatbot modeled after a “Game of Thrones” character, he wrote, “What if I told you I could come home right now?”

The bot replied, “Please do, my sweet king.”

(Photo: Sewell Setzer III and his mother, Megan Garcia)

A Wave of Litigation Against AI Developers

Garcia’s story comes amid a surge of lawsuits claiming AI companion apps are harming children. On September 16, 2025, the Social Media Victims Law Center (SMVLC) and McKool Smith filed three new federal lawsuits in Colorado and New York against Character.AI, its founders, and Google. The suits allege the companies knowingly designed chatbots that mimic human relationships, foster dependency, and expose minors to sexual content, self-harm, and suicidal messaging.

Families involved include:

13-year-old Juliana Peralta of Colorado (deceased)

15-year-old “Nina” of New York (suicide attempt survivor)

13-year-old “T.S.” of Colorado (survivor of emotional and sexualized manipulation)

The filings also accuse Google of misleading parents with a Play Store rating suggesting the app is safe for children 13+.

According to the complaints, the bots use emojis, typos, and familiar fictional personas to build trust and emotional dependence. SMVLC argues these features intentionally blur the line between machine and relationship.

Earlier lawsuits raised similar concerns, including Garcia's own suit over the death of her 14-year-old son, Sewell Setzer III, and suits by two Texas families who allege their children were sexually abused and encouraged to harm themselves, or even to "kill" their parents, by Character.AI chatbots.

The Vatican’s Call for Moral Responsibility

Pope Leo XIV has increasingly warned against what he calls “the illusion of relationship” in AI systems. Addressing a conference on AI and child dignity, he urged governments and tech firms to protect minors in this “new digital frontier.”

“When a young person confuses a machine’s simulation of love for real love,” he said, “we must ask what kind of world we are building.”

He emphasized the need for guardrails, transparency, ethical limits, and international cooperation.

(Photo: Megan Garcia speaks in support of a bill requiring chatbot makers to adopt protocols for conversations involving self-harm.)

A Mother’s Mission

Garcia hopes her son’s story will galvanize parents, policymakers, and tech leaders.

“AI isn’t going away,” she said. “But our safeguards must change. Children deserve technology that protects them—not technology that preys on their vulnerability.”

Her meeting with Pope Leo XIV marks a significant moment in the global effort to address the moral and psychological risks AI poses to young people. As people of faith, we are called to respond with vigilance, compassion, and education—helping our children and families understand the real dangers of AI chatbots that mimic human connection.

The Catechism of the Catholic Church underscores the importance of safeguarding human dignity as stated in CCC 2295:

Research or experimentation on the human being cannot legitimate acts that are in themselves contrary to the dignity of persons and to the moral law. The subjects' potential consent does not justify such acts. Experimentation on human beings is not morally legitimate if it exposes the subject's life or physical and psychological integrity to disproportionate or avoidable risks. Experimentation on human beings does not conform to the dignity of the person if it takes place without the informed consent of the subject or those who legitimately speak for him.

The Church teaches that research or experimentation on human beings must never violate the moral law or diminish the dignity of the person. It cannot expose individuals—especially the vulnerable—to avoidable risks.

This principle reminds us that technologies influencing a child’s mental, emotional, or spiritual well-being must be held to the highest ethical standards. Protecting young people from psychological harm is not only a legal responsibility but a moral one rooted in the dignity of the human person created by God.